Choosing the right hardware starts with understanding the components that power our devices. Two of the most important in computing are the Central Processing Unit (CPU) and the Graphics Processing Unit (GPU). Both are processors, but they are designed for different workloads: a CPU is built to run a small number of varied tasks quickly, while a GPU is built to apply the same operation to large amounts of data at once. Let's dive into the key differences and applications of CPUs and GPUs.

Definitions and Basic Functions

CPU: The Central Processing Unit, often referred to as the "brain" of the computer, handles general-purpose tasks and instructions. It is responsible for executing programs, performing calculations, and managing data flow within the system.

GPU: The Graphics Processing Unit specializes in handling graphical data. It is optimized for rendering images, videos, and animations, which makes it essential for tasks requiring high-speed image processing. Its usefulness extends beyond graphics, however: the same parallel hardware is well suited to deep learning and other computations that apply one operation to many data elements.

Core Differences

Here are the primary differences between CPUs and GPUs:

| Feature | CPU | GPU |
|---|---|---|
| Primary Function | General-purpose computing | Graphics rendering and parallel processing |
| Core Structure | Fewer, more powerful cores | Thousands of smaller, efficient cores |
| Performance | Better for single-threaded tasks | Better for multi-threaded, parallel tasks |
| Flexibility | Versatile but not specialized | Highly specialized for specific tasks |
| Power Consumption | Generally lower | Higher due to increased core count |

Architectural Differences

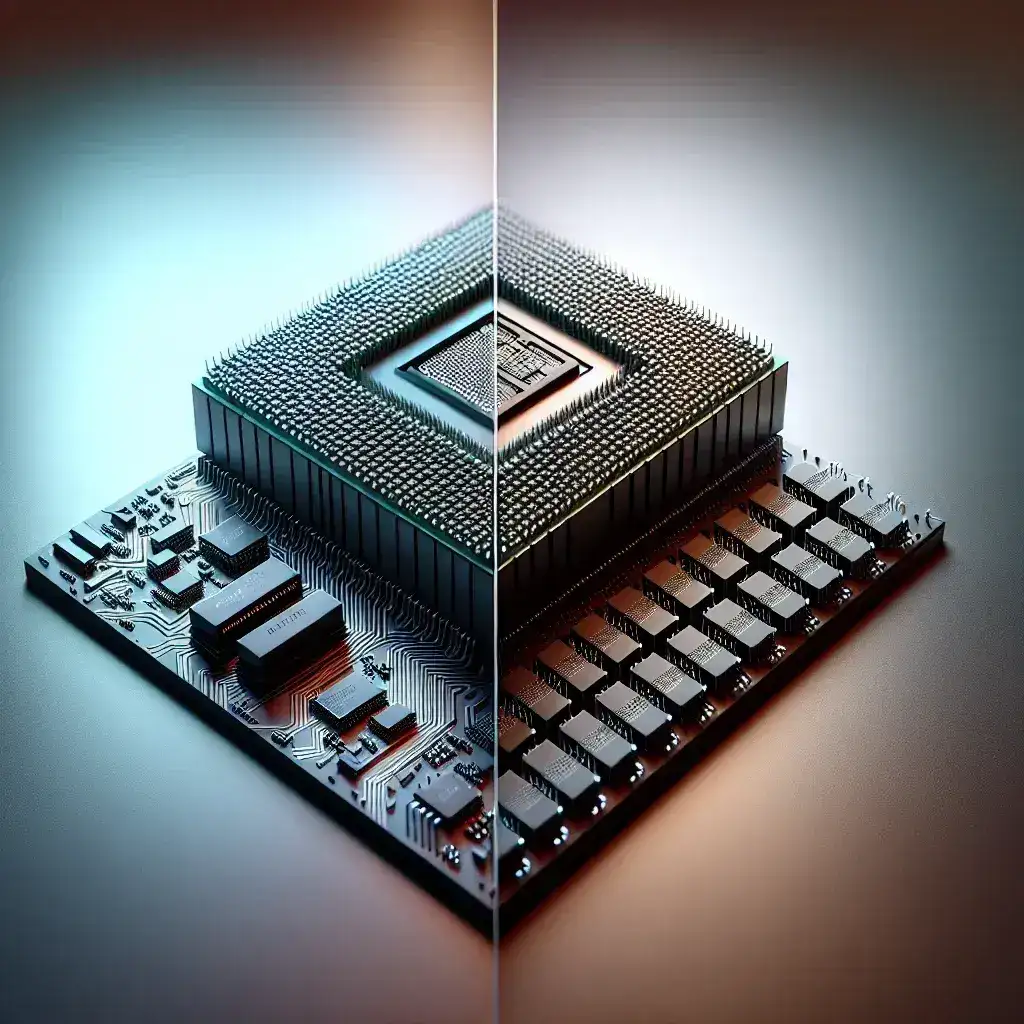

CPU Architecture

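CPUs generally consist of a small number of powerful cores (from a handful in consumer chips to dozens in server parts) designed to handle a wide variety of tasks. These cores run at high clock speeds and are optimized for low-latency sequential processing, using large caches, branch prediction, and out-of-order execution. Each core supports a rich, general-purpose instruction set, which makes CPUs versatile for running diverse applications. Many CPUs also use simultaneous multithreading (marketed by Intel as Hyper-Threading), which lets one core run more than one thread at a time to keep its execution units busy.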

GPU Architecture

GPUs, in contrast, comprise thousands of smaller, simpler cores that work in parallel. This architecture makes them particularly efficient for tasks that can be broken down into many small, independent operations. Rendering the pixels on a screen and performing the large matrix multiplications used in machine learning are two tasks where GPUs excel. Many modern GPUs also include dedicated matrix hardware, such as NVIDIA's Tensor Cores, to further speed up these computations.
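
To make "broken down into smaller, simultaneous operations" concrete, here is a minimal pure-Python sketch of matrix multiplication written so that every output element is its own independent unit of work. The function names `output_element` and `matmul` are made up for illustration, and the loop runs sequentially on the CPU; the point is only that no iteration depends on another, which is the property a GPU exploits by giving each element to a separate thread.

```python
from itertools import product


def output_element(a, b, row, col):
    """Compute one element of a @ b from one row of a and one column of b."""
    return sum(a[row][k] * b[k][col] for k in range(len(b)))


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    rows, cols = len(a), len(b[0])
    result = [[0] * cols for _ in range(rows)]
    # Every (row, col) pair is independent of all the others, so these
    # iterations could run in any order, or all at the same time.
    for row, col in product(range(rows), range(cols)):
        result[row][col] = output_element(a, b, row, col)
    return result


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
print(matmul(a, b))  # [[19, 22], [43, 50]]
```

A 2x2 product has only four such units of work, but a 4096x4096 product has almost 17 million, which is why this kind of workload maps well onto thousands of simple cores.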

Applications

When to Use CPUs

- General Computing: For everyday tasks such as web browsing, office applications, and basic media consumption, a CPU is sufficient.

- Sequential Processes: Jobs in which each step depends on the result of the previous one, or that branch heavily, are handled more efficiently by CPUs. Certain data analysis tasks fall into this category.

- System Management: Managing I/O operations, memory, and other peripherals is best handled by the CPU, given its versatility in managing various tasks.
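
One way to picture a sequential job is a chain of steps where each step consumes the previous step's output. The sketch below uses repeated SHA-256 hashing purely as an illustration; the function name `hash_chain` and the seed value are hypothetical. Because iteration i cannot start before iteration i - 1 finishes, extra cores cannot shorten a single chain, and a fast single core is what matters.

```python
import hashlib


def hash_chain(seed: bytes, steps: int) -> bytes:
    """Apply SHA-256 repeatedly; each step needs the previous digest."""
    digest = seed
    for _ in range(steps):
        # This iteration cannot begin until the previous one has finished.
        digest = hashlib.sha256(digest).digest()
    return digest


final_digest = hash_chain(b"example seed", 1000)
print(final_digest.hex())
```

Many independent chains could still run side by side, so the limitation applies to the dependency within one chain, not to the hashing operation itself.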

When to Use GPUs

- Graphics Rendering: Ideal for gaming, video editing, and any application that requires high-speed rendering of graphics.

- Parallel Computing: Tasks like scientific simulations, financial modeling, and machine learning benefit from the GPU's ability to handle multiple operations simultaneously.

- Deep Learning: Training neural networks, a task involving extensive matrix operations, is significantly accelerated by the use of GPUs.
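
How much a GPU helps depends on how much of a job can actually run in parallel. Amdahl's law gives a rough upper bound on the speedup: if a fraction `p` of the work is parallelizable and runs on `n` parallel units, the speedup is at most `1 / ((1 - p) + p / n)`. The sketch below is a simplified model (it ignores data transfer and memory limits), and the function name and example fractions are chosen for illustration only.

```python
def amdahl_speedup(parallel_fraction: float, units: int) -> float:
    """Upper bound on speedup when only part of a job runs in parallel."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / units)


# Hypothetical workloads running on 1000 parallel units.
for fraction in (0.50, 0.95, 0.999):
    speedup = amdahl_speedup(fraction, 1000)
    print(f"{fraction:.1%} parallel: at most {speedup:.1f}x faster")
```

Under this model, a job that is only half parallel can never run more than twice as fast no matter how many cores are available, which is why largely sequential work stays on the CPU.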

Performance Metrics

Performance metrics for CPUs and GPUs vary considerably due to their distinct architectures and purposes. Here are some common metrics used for evaluation:

CPU Metrics

- Clock Speed: Measured in GHz, this is the number of cycles a core completes per second (1 GHz is one billion cycles per second).

- Core Count: The number of cores determines how many threads the CPU can execute truly in parallel.

- Instructions Per Cycle (IPC): The average number of instructions a core completes in a single cycle. Together with clock speed, it determines per-core performance.
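
These three metrics multiply together into a rough ceiling on instruction throughput. The sketch below compares two hypothetical chips (the figures are invented, not measurements of real products) to show why clock speed alone can be misleading. Treat the result as an idealized upper bound: real workloads are also limited by memory latency, cache misses, and how well the software uses multiple cores.

```python
def peak_instructions_per_second(clock_ghz: float, ipc: float, cores: int) -> float:
    """Idealized upper bound: cycles per second x instructions per cycle x cores."""
    return clock_ghz * 1e9 * ipc * cores


# Two hypothetical 8-core chips with invented specifications.
chip_a = peak_instructions_per_second(clock_ghz=4.0, ipc=2.0, cores=8)
chip_b = peak_instructions_per_second(clock_ghz=3.0, ipc=3.0, cores=8)

print(f"Chip A: {chip_a / 1e9:.0f} billion instructions/s")  # 64
print(f"Chip B: {chip_b / 1e9:.0f} billion instructions/s")  # 72
```

In this example, chip B has the lower clock speed but the higher ceiling, because its higher IPC more than makes up the difference.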

GPU Metrics

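- CUDA Cores: For NVIDIA GPUs, the number of CUDA cores indicates parallel processing capacity. More cores generally mean better performance within the same architecture generation, but counts are not directly comparable across generations or vendors (AMD's closest equivalent is the stream processor).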

- Memory Bandwidth: The rate at which data can be read from or stored into memory by the GPU, commonly measured in GB/s.

- TFLOPS: Trillions of floating-point operations per second, a measure of the GPU's theoretical peak computational throughput.
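
The TFLOPS and memory bandwidth figures on a spec sheet can usually be approximated from more basic numbers. The sketch below uses two common back-of-the-envelope formulas: peak FP32 throughput as cores x clock x 2 (counting one fused multiply-add per core per cycle as two operations), and bandwidth as bus width in bytes x per-pin data rate. The function names and the example GPU are hypothetical, and both results are theoretical peaks that real workloads rarely reach.

```python
def peak_fp32_tflops(shader_cores: int, clock_ghz: float) -> float:
    """Theoretical peak, assuming one fused multiply-add per core per cycle."""
    # A fused multiply-add is conventionally counted as 2 operations.
    flops = shader_cores * clock_ghz * 1e9 * 2
    return flops / 1e12


def memory_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Theoretical peak: bus width in bytes x per-pin data rate in Gbit/s."""
    return bus_width_bits / 8 * data_rate_gbps


# A hypothetical GPU: 10,000 cores at 2.0 GHz, 256-bit bus at 16 Gbit/s per pin.
print(peak_fp32_tflops(10_000, 2.0))     # 40.0
print(memory_bandwidth_gb_s(256, 16.0))  # 512.0
```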

Future Trends

The landscape of CPUs and GPUs continues to evolve, driven by advances in technology and growing demands. Hybrid designs that combine CPU and GPU cores on a single chip, which AMD markets as Accelerated Processing Units (APUs), are gaining popularity.

Innovations in CPUs

- AI Integration: Increasingly, CPUs are integrating specialized AI accelerators for improved machine learning performance.

- Smaller Nodes: Moving to smaller semiconductor process nodes (e.g., 7 nm, 5 nm) improves performance and energy efficiency.

Innovations in GPUs

- Ray Tracing: Real-time ray tracing capabilities are enhancing the realism in gaming and graphics applications.

- Enhanced Parallelism: GPU architectures continue to increase their parallel processing capacity, particularly for AI and machine learning tasks.

Conclusion

Understanding the differences between CPUs and GPUs is essential for optimizing performance based on your specific needs. While CPUs are versatile and handle a broad range of tasks, GPUs excel in parallel processing and specialized computations. Whether you're setting up a gaming rig, diving into deep learning, or simply looking to improve your everyday computing, knowing when and how to leverage each component can make all the difference.